I’m using Meta’s AI smart glasses as a better Humane Pin

The Meta Ray-Ban smart glasses now pack AI smarts, and I’m using them as a better version of the Humane Ai Pin

Humane’s Ai Pin is now available as one of the first AI hardware products. It’s been out for a few weeks, and it aims to take the best features of AI smartphones and put them in a standalone device. First impressions of the Humane Pin haven’t been great. They’ve been pretty bad, really. I (and many others) have wondered if devices like this should just be apps instead. That said, I’m still keen on an alternative device for AI, so I can leave my smartphone behind. And I think I’ve found the perfect one: Meta’s Ray-Ban smart glasses.

I wear glasses every day anyway, so I think this is the ideal form factor. The Meta Ray-Ban glasses have been on my face for a while, and I’ve gone as far as putting prescription lenses in them, so I can wear them all the time.

You might be able to achieve something similar for AI voice requests with a set of wireless earbuds, but then you’re wearing something extra. Plus, you’d miss out on all the other features that the Meta smart glasses offer, and they were already pretty good. But let me show you what it’s like to use the glasses now that they’ve got AI.

The ideal AI hardware device?

Let’s start things off with the non-AI stuff. The Meta Ray-Ban glasses include down-firing speakers that aim straight into your lugs. Now, Meta reckons they’re quiet enough that other people won’t hear the audio. But it’s not as simple as that. If you’re sat at home in the quiet, someone sat next to you could definitely hear the audio. When you’re out and about, however, they’re probably not going to hear anything.

These speakers are great for playing music from your phone, just like a set of wireless earbuds. But I’ve found their best use to be when taking phone calls. Talking to someone on the phone without earbuds or holding the handset to your ear feels like magic. It’s the flexibility of phone calls on earbuds, but with just your glasses! The microphones are also pretty good. And you can use both of these to speak to Meta’s assistant. It can send messages for you, read them aloud, and now has AI abilities (more on this later).

You also get a camera built into the frame, which is ideal for taking POV photos or videos. It’s only a 12MP snapper that records video in 1080p, but I’m impressed with the image quality – and so were others. You can post straight to socials and even live stream, and everything syncs up to the app. When I’m using the glasses so personally, it’s a bit weird having Facebook watch what I do, but I’m used to this now. And I take the glasses off when I want to be extra careful. A light flashes white when you take a picture or record video. But the snapper is pretty noticeable anyway, and multiple people have commented on it.

But the AI smarts are what really make the Meta Ray-Ban smart glasses special. They now pack in Meta AI, which is powered by the Llama model. It’s essentially an AI chatbot in voice format. You can ask it queries, and it’ll give you answers – and pretty much nothing is too much for it to handle. It’s also great at helping me brainstorm or find a fact I’d usually quickly Google. If I want help writing a headline or a line of code, it’ll tell me what to write.

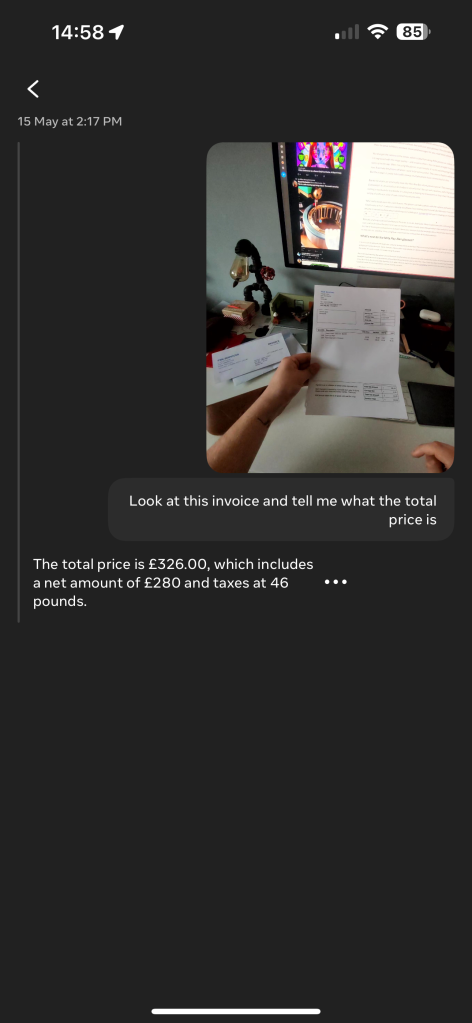

What really stands out is the vision feature. The glasses can take a photo with the camera and work out what you’re looking at. It’s a bit gimmicky at first – I asked it to identify the iPhone I was holding (and it could). But the more I’ve used it, the more useful it’s become. It can tell you facts about something you’re looking at, summarise text you’re reading, or translate signs into a language you understand.

Basically, anything you’d want the Humane Pin to do, it can do. And faster. Meta’s specs are a bit confusing when it comes to knowing your location, though. They know the town I’m in, but can’t tell me what’s nearby (even though others have asked for nearby recommendations). The AI model gets confused because it doesn’t know your location, even though the hardware does. Weird. But beyond this, I have no gripes, no requests, no… anything. I love using them, and I think this is the perfect AI hardware device.

What’s next for the Meta Ray-Ban glasses?

I’m very convinced by the Meta glasses, and plan to keep them on my face. There’s no complicated UI to get used to, and they’re only going to get better. Humane tries to offer a display of sorts, but it’s led to something clunky. Meta is all in on voice, and it’s for the better. If I want a screen, I’m happy using my phone.

Do I think the Meta Ray-Ban glasses are perfect with AI? Definitely not. Meta’s AI is more limited than other AI offerings out there. ChatGPT is perhaps the most developed, and would be the perfect fit for glasses like these. Plus, there’s the fact that I’m wearing Facebook on my face. Yuck. But it can handle the majority of what I ask it to, and is very good at recognising what the on-board camera sees. Couple this with the existing features, and you’re on to a winner.