What is Google Gemini (formerly Bard)? The Big G’s AI chatbot explained

Google's AI chatbot is rolling out to the public

There’s been a lot of AI talk in the tech headlines, and Google is fully on board. The search brand launched its own AI-powered chatbot called Bard in March 2023, and has since renamed it to Gemini, after the Large Language Model (LLM) that powers its AI chatbot. Like similar tools, it promises a comprehensive conversation experience, all powered by artificial intelligence, with some very clever tricks thrown in for good measure.

Gemini is not the first foray into AI from Google. There are features in Google Docs and Gmail that use AI to predict the ends of sentences. Even the search box on the Google homepage uses AI to predict the end of search queries. There are Google Maps features, Android features, call screening, and dictation apps that all rely on artificial intelligence. The Big G is pretty familiar with all things AI.

But what exactly can Gemini do? And how does it all work? We get familiar with Google’s AI friend and explain everything that you need to know.

What exactly is Google Gemini?

Gemini is an AI-powered chatbot released by Google. It originally ran on Google's LaMDA and then PaLM 2 language models, and it now uses the brand's own Gemini models as the basis for its knowledge. And, unlike some other AI chatbots, Google plugged it straight into the web so that it can access fresh, up-to-date information.

What does Gemini do with all this knowledge? It uses it to answer questions and queries in a conversational manner. Rather than typing in keywords for a search result, you can have a real conversation with the chatbot. You can get pretty detailed with these questions, too. Beyond basic queries such as the year a celebrity was born, Gemini can write essays or provide programming examples, to name just a couple of its capabilities.

Because LaMDA is a pretty extensive language model, Bard only got to set its peepers on a lightweight version of it at launch. Released as an experiment, it had some fairly obvious flaws. Since then, it's undergone several major updates and improvements, some of them driven by real-world feedback. This probably explains the rebrand from Bard to Gemini: Google wants to make it clear that the latest version is so far ahead of its previous efforts that it warrants a new name.

What can Google Gemini do?

As we've lightly touched upon, Gemini can tackle much more complicated questions. Much like current search engines, you can ask the AI chatbot regular ol' questions like "When was Stuff Magazine launched?" and you'll usually get accurate information from Gemini's extensive knowledge base. But it can go quite a few steps further than this.

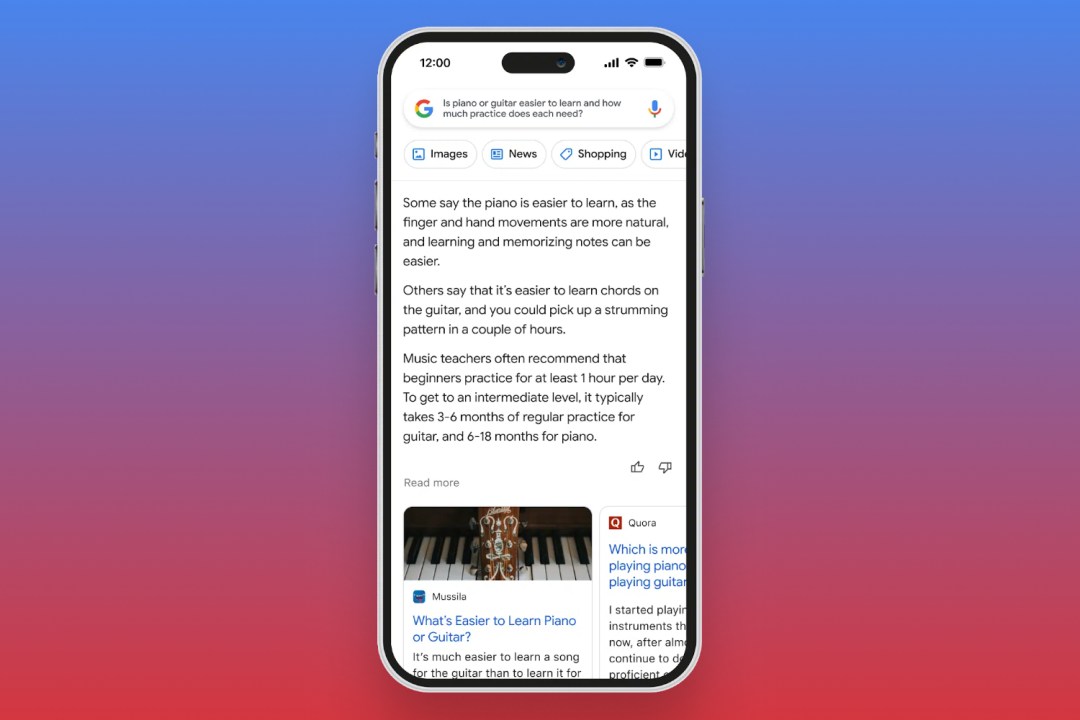

Gemini can also answer more open-ended or abstract queries. It can tackle questions like "Is the piano or guitar easier to learn?" with lengthy answers. How can it do this? Gemini draws on both its language model (which works a bit like a brain) and the web. This means it's not only gathering objective facts, but also information people share in blogs and articles. In essence, it can sort of read and understand people's opinions, which it can use to discuss queries in more detail. More recently, Google gave Bard the ability to use images as prompts rather than just text, and with Gemini 1.5, it has another extremely impressive feature that sets it apart from the competition.

Now, you might shrug off the rebrand as a mere marketing exercise, but Google has recently shown off some new features which strongly suggest that Gemini is gunning for the top AI prize. Its most recent iteration, Google Gemini 1.5, has a gargantuan context window, which in essence means it can handle vast queries and look through a lot of information at once. Context windows are measured in tokens (small chunks of text, roughly a word or part of a word each), and Gemini 1.5 has a cool million at its disposal. For comparison, OpenAI's GPT-4 Turbo has a 128,000-token context window.

But what does that mean in the real world? Thankfully, Google has a rather useful, easy-to-understand example of Gemini's power in action. The video below shows how Gemini can search through a video for specific moments, based either on natural language questions or even a crude drawing. It's seriously impressive stuff, and the possibilities are vast, even at a consumer level. Google Photos, for example, already lets you search for things like "food" or "cat videos", but imagine asking it something like "show me a video clip of me from the time I fell over ice skating", and having the precise clip served up in an instant.

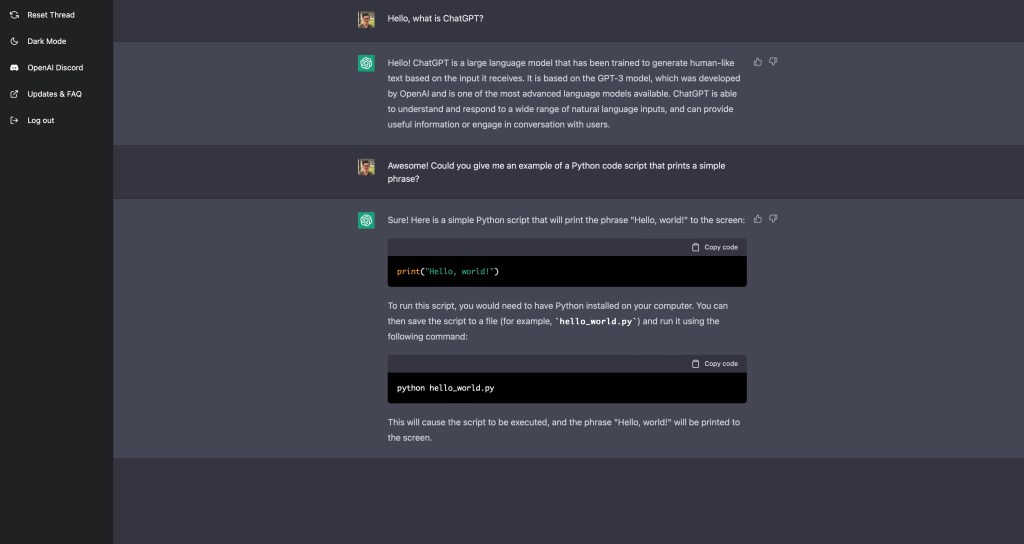

We've seen other AI chatbots undertake a variety of different tasks. OpenAI's ChatGPT has been able to write or plan full-length essays on historical topics, albeit with varying degrees of success and accuracy. It's also been able to provide code examples for programming-related questions, and they often actually work. It can almost feel like having a mini virtual assistant.

Plus, as we mentioned, Google has given Gemini free rein over the web, right from the main page of Google Search. This means it can access up-to-date knowledge and adapt as things change. It'll distil large amounts of information into snippets that are easier to digest. Google also explained that these snippets will offer multiple perspectives, using multiple websites as sources. It'll even use forums and YouTube videos for further information from real people.

From these snippets, you can carry on with conversations, using suggested prompts at the bottom. They even integrate with shopping searches, allowing Gemini to do the hard work of searching on your behalf.

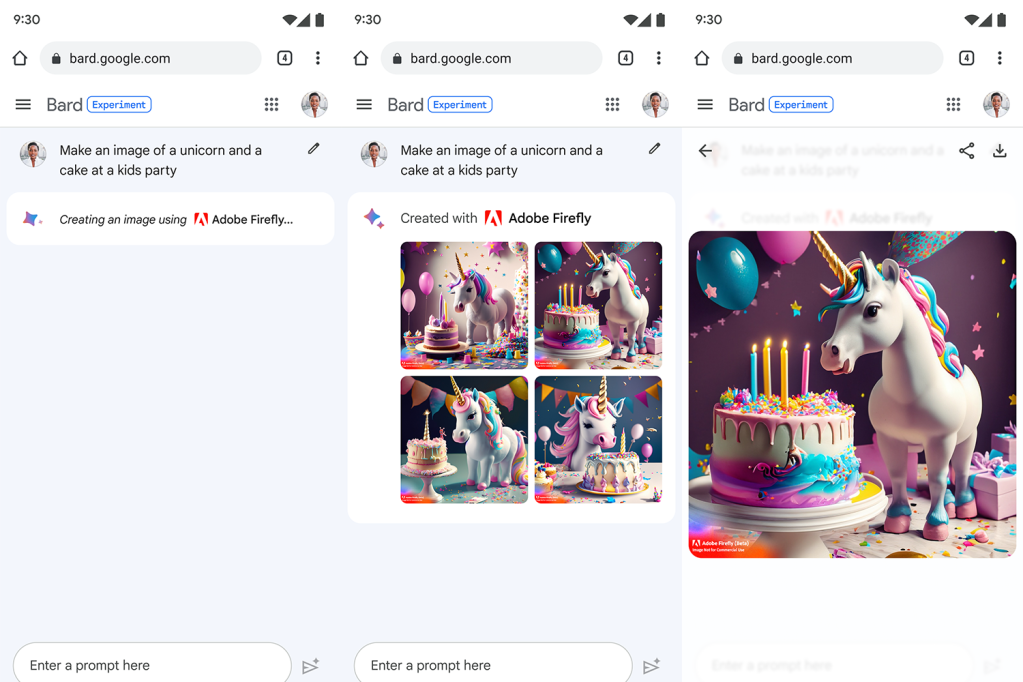

Also in the pipeline is a team-up with Adobe that brings image generation to Gemini via Adobe's Firefly tool. It'll work similarly to DALL-E, Midjourney, and other tools, but will live right inside the regular Gemini chat interface. You'll be able to type something into Gemini and have Firefly create an image for you. You can then take it onward to be edited in the Adobe Express app (and presumably Photoshop, too). The tool is currently in beta.

Google plans to make Gemini more visual, both in its responses and in the prompts you give it. It says you'll be able to ask something like "What are some must-see sights in New Orleans?" and, in addition to text, get a helpful response with rich visuals that give you a much better sense of what you're exploring.

How can I get my hands on Google Gemini?

Google Gemini 1.0 is already available for you to try. Version 1.5 is currently only available to business users and developers through Google's Vertex AI and AI Studio, though it will eventually replace Gemini 1.0 as standard. Once that happens, the regular version will have a 128,000-token context window at its disposal, while Gemini Pro will make use of the full one million.

How does Gemini compare to other AI chatbots?

On a basic level, Gemini is pretty similar to other AI-based chatbots. The core idea is the same: to hold a conversation with the aim of answering queries and questions. Currently, there aren't too many comparable chatbots. While ChatGPT has almost certainly been the most advanced, Google's Gemini updates look set to push the bot towards the top. Alternatives such as Bing AI, Replika, and ChatSonic remain popular, but bring up the rear with more basic formats.

These competitors also rely on extensive training data as a source of knowledge. However, many of them can only draw on what's in that training data, and nothing further. For example, ChatGPT's knowledge was originally cut off in 2021, so it could get crucial facts wrong about anything more recent. Want to ask it why you should buy the iPhone 14? No dice, we're afraid. Gemini avoids this issue by plugging straight into the web, where it can access up-to-date data instantly.

But that's not the only way Google differs from these other AI chatbots. Google's data resources are arguably far larger than those of its competitors, including OpenAI. Google can draw on the vast amount of data it gathers (which is another can of worms entirely) to train Gemini. And since Google is one of the largest and most sophisticated data gatherers, Gemini's "brain" could be packed with even more valuable information.

On top of all this, Gemini keeps getting more sophisticated. New features such as snippets in Search, image generation with Firefly, and upgraded code generation (to name but a few) arguably give the tool the widest range of features around. Provided Google can ensure Gemini lives up to the demos, we might have to give the Big G the AI crown here.