Intel’s RealSense camera: gesture control and 3D scanning built into your next PC

Tablets, all-in-ones, laptops and tabtops of all shapes and sizes will gain depth perception and 3D scanning capabilities this year [updated with hands-on impressions]

Intel’s CES 2014 press conference was all about the potentially revolutionary future of ‘perceptual computing’.

The first step of realising an evolved, more intuitive computer is to add “senses” to the computing “brain”, says Intel’s Mooly Eden. Ultimately, computers will be able to act more like we do, and we’ll be able to interact with them as we do with other people. (Eden pointed out that, according to Moore’s Law, the silicon chip would match the complexity of the human brain within 12 years. Sure, you’ll need software that can exploit the complexity, but it’s still rather sobering.)

The first “sense” Intel is adding is depth perception, via its new RealSense camera.

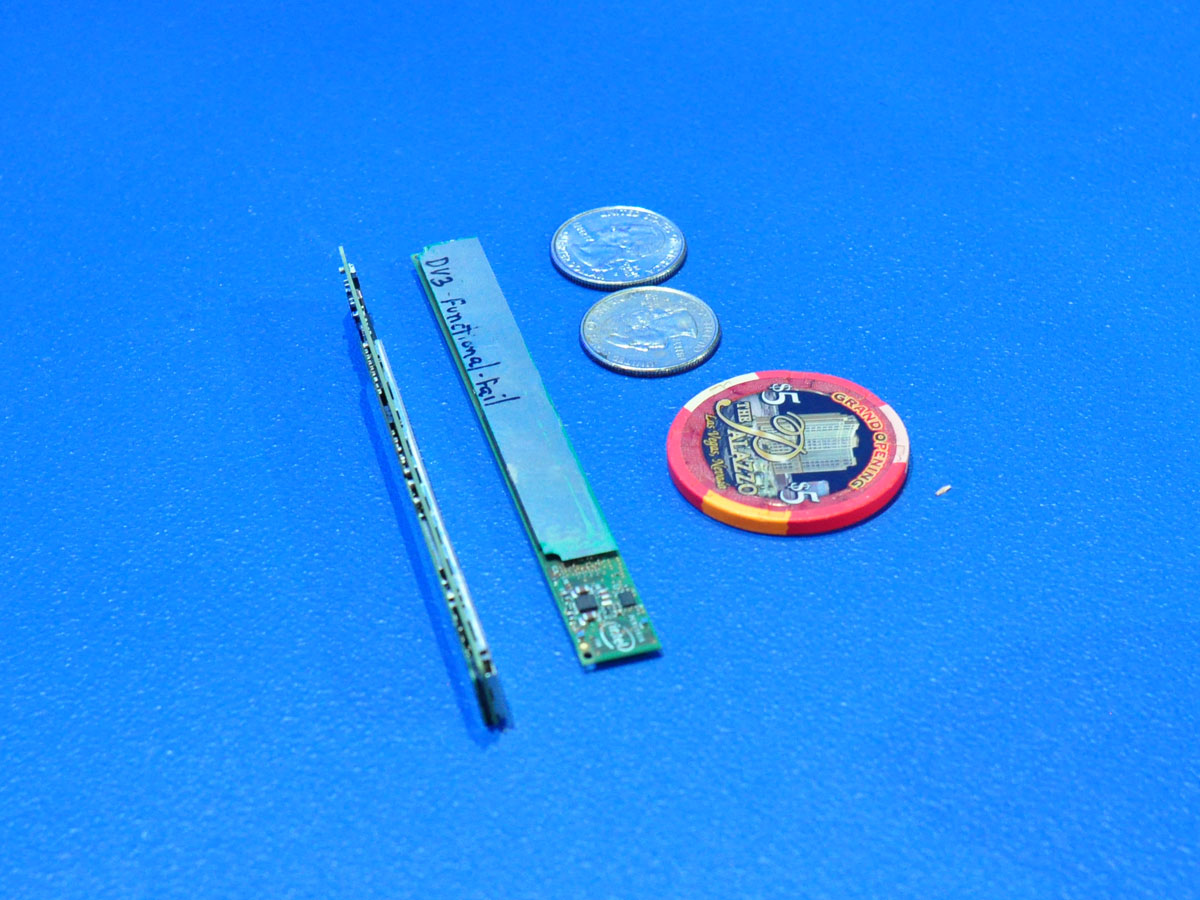

The RealSense camera unites a 1080p video camera module with a ‘best in class’ depth sensor, and manages to cram the lot into a sliver roughly the thickness of a Las Vegas poker chip.

It’s capable of recognising individual fingers, depth (to an as-yet unspecified degree) and facial expressions, and Intel’s representatives had it showing off a variety of Kinect-like gesture control methods that belie its slender proportions.

Laptops, tablets and all-in-ones from Lenovo, Asus and Dell graced the stage with jury-rigged RealSense cameras in their screen bezels – but this year many more from the likes of Fujitsu and Acer will ship with the camera built in.

Like Kinect, but wafer-thin

Most of RealSense’s capabilities aren’t exactly new – tinkerers have been plugging in and toying with Kinect on their Windows PCs for a few years now. But the quality of the first-gen RealSense appears considerably better than Kinect, despite its proportions, and there’s a trick that Kinect can’t replicate: RealSense is a 3D scanner.

At the CES keynote, Intel CEO Brian Krzanich showed off how that works. You simply move the camera around the object to be scanned, capturing it from every relevant angle, and thanks to built-in movement sensors the RealSense maps out the entire environment in impressive detail.

Intel also demoed RealSense’s augmented reality prowess, showing off a game where a table in front of the computer became a grass-covered field with sheep grazing on it, and objects placed within it became a part of the playing surface. The young girl playing the game put her hand on the table, and the shepherd character climbed up her arm. Impressive stuff.

Also demoed was a real-time 3D video capture program that could differentiate between objects in the foreground and background, and apply filters to each accordingly. It’s also capable of real-time green screen effects, so you can pretend you’re in Vegas while Skyping your mum.
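Intel hasn’t said how the demo separates foreground from background, but the basic idea can be sketched with a simple depth threshold. The snippet below is a minimal illustration, not Intel’s method: it assumes a per-pixel depth map in millimetres that lines up with the colour image, and the function name `composite_by_depth` and the one-metre cut-off are made up for the example.

```python
from typing import List, Tuple

Pixel = Tuple[int, int, int]  # (red, green, blue)


def composite_by_depth(
    color: List[List[Pixel]],
    depth_mm: List[List[int]],
    backdrop: List[List[Pixel]],
    max_depth_mm: int = 1000,  # assumed cut-off, not an Intel figure
) -> List[List[Pixel]]:
    """Keep pixels nearer than max_depth_mm; swap the rest for the backdrop."""
    out = []
    for color_row, depth_row, backdrop_row in zip(color, depth_mm, backdrop):
        out_row = []
        for pixel, d, bg in zip(color_row, depth_row, backdrop_row):
            # A depth of 0 is treated here as "no reading" and sent to the background.
            is_foreground = 0 < d <= max_depth_mm
            out_row.append(pixel if is_foreground else bg)
        out.append(out_row)
    return out


# One-row "image": a face 60cm away and a wall 2.5m away.
color = [[(200, 150, 120), (10, 10, 10)]]
depth_mm = [[600, 2500]]
vegas = [[(0, 0, 255), (0, 0, 255)]]
print(composite_by_depth(color, depth_mm, vegas))
# → [[(200, 150, 120), (0, 0, 255)]]
```

A real pipeline would also have to smooth the ragged edges a hard threshold leaves behind, which is presumably part of what a better depth sensor buys you.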

Much of the functionality was described by Mooly Eden as being ‘pre-alpha’, but it’s clear that Intel is fully invested in making RealSense happen this year. It’s also pledged to launch more RealSense hardware in future, including devices with enhanced natural language voice recognition capabilities.

Get ready to meet a computer more charismatic than you are.

Hands-off with Intel RealSense

First things first: RealSense isn’t quite fully baked yet. We don’t just say that because most of the test machines on Intel’s CES stand had ‘RealSense’ cameras with Creative Labs logos all over them perched on top, nor because the gadgets with integrated RealSense cameras had clearly been hacked at with scalpels, but because, for now, it works in a hit-and-miss way.

Although on paper capable of recognising 10 digits simultaneously, the test machines sometimes struggled to discern our fingers from our hands, or to recognise the way in which we were moving. This resulted in unpredictable on-screen behaviour and some frustrating gesture gaming experiences. Anyone who has tried to play Kinect from too close in or in poor light will be familiar with the problems we encountered.

But when RealSense works, it’s hugely impressive. We tried out a real-time synthesizer that allowed us to construct elaborate tunes with gestures and prods. The camera could discern how close our fingers were to the screen: when we ‘reached in’ through the on-screen water, we could move and set up new instruments around the UI and change the tempo by pointing up and down; when our hands were back out of the water we could just get down to playing.

Elsewhere on the Intel stand, there was a RealSense-equipped 3D workstation that allowed us to manipulate an Intel doll in midair with our fingertips, and a brilliant pinball game where you bash the ball with a real paddle held in your hands.

It’s mind-blowing that any camera can achieve this in the poor lighting conditions of the CES show floor, let alone a slim sliver that will soon be integrated into consumer tablets, all-in-ones and laptops. It’s going to be very interesting to see how the finished product performs later this year.