These new iPhone features are our first real look at iOS 19

There is a slate of new accessibility features for the iPhone, which will be released as part of the major free iOS 19 update later this year.

Apple is working on its next major software update for the latest iPhones – iOS 19. We won’t be seeing the free major update for a few more weeks, when it debuts at Apple’s developers conference. But the tech giant has given us our first look at the upcoming software with the release of these new iPhone accessibility features.

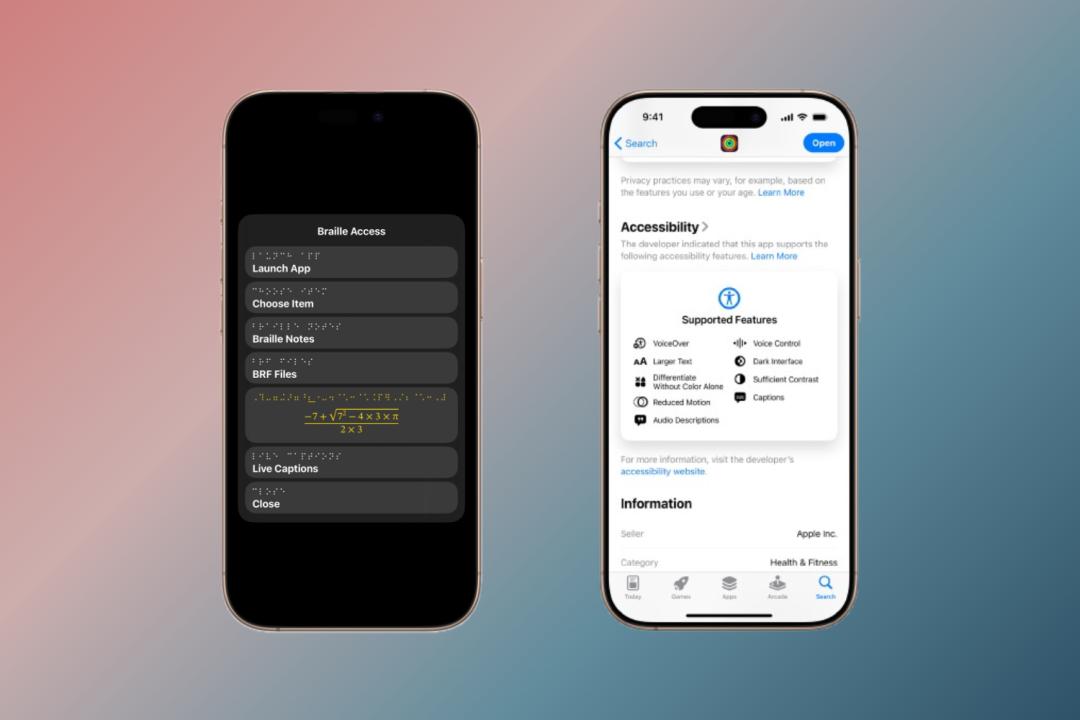

First up, we’ve got Accessibility Nutrition Labels. It sounds like something you’d find on food packaging, but it’s actually a new section on the App Store that lets users know whether an app is accessible to them before they download it. So if you rely on VoiceOver, high contrast or larger text, you’ll be able to check for support up front. It’s similar to the Privacy Labels we’ve had for a few years.

Magnifier is also making the jump to the Mac. It connects with your webcam or even your iPhone’s camera, meaning you can read that tiny print on a whiteboard across the room or inspect your cat’s whiskers in full HD if that’s your thing. There’s even a new Accessibility Reader built in that turns real-world text into a customisable, easy-to-read format. Vehicle Motion Cues, which use dots on the screen to help you avoid motion sickness, are also coming to Mac.

Braille Access basically turns iPhones, iPads, and Macs into full-on braille note-takers. It supports Nemeth Braille for maths and science, reads BRF files, and even pairs up with Live Captions to transcribe directly onto braille displays. Then there’s Accessibility Reader, which turns any app or bit of text into something your eyes can actually focus on. You can customise fonts, colours, and spacing. It’s designed for folks with dyslexia or low vision, but anyone who hates Comic Sans might get some use out of it too.

Your Apple Watch is getting some attention as well, with Live Listen now bringing real-time captions straight to your wrist. It basically turns the Watch into a control centre for your iPhone’s microphone, so you can get subtitles on the fly. Apple Vision Pro’s accessibility toolkit is also getting a bit beefier, with enhanced Zoom and a new Live Recognition feature that uses machine learning to describe your surroundings. Developers will also be able to use the headset’s main camera to create apps that offer visual interpretation.

Other updates include an improved Personal Voice, which now needs only ten phrases to generate a custom voice, along with Name Recognition in Sound Recognition and improved Head Tracking controls. Plus, Music Haptics on iPhone is getting new customisation options, and you’ll be able to temporarily share your accessibility settings with another device.

This annual accessibility drop is usually Apple’s sneak preview of what’s cooking behind the scenes, and this year is no different. These features offer an early look at iOS 19’s direction. Expect iOS 19 to be unveiled at WWDC 2025 in June, with a full release in September. We’re hoping Apple Intelligence will (finally) be completed alongside the long-rumoured redesign.